I am a fifth-year Ph.D. student in Computer Science and Engineering at Seoul National University, advised by Hyun Oh Song. From September 2023 to February 2024, I visited Kyunghyun Cho's group at New York University (NYU), where I worked on multi-objective black-box optimization algorithms for combinatorial problems. I received my B.S. in Mathematical Science from Seoul National University in 2020.

Email / Google Scholar / GitHub / CV

My research interests are combinatorial optimization and efficient machine learning. I am currently focused on black-box optimization methods for high-dimensional combinatorial objects, including text, proteins, and molecules. Previously, I explored channel pruning and depth compression methods to improve the efficiency of neural networks.

Deokjae Lee, Hyun Oh Song. Neural Information Processing Systems (NeurIPS), 2025.

paper / poster / code / bibtex

We develop Q-Palette, a suite of quantizers with efficient CUDA inference kernels and support for a wide range of fractional bitwidths. Building on Q-Palette, we propose a novel mixed-scheme quantization framework that jointly optimizes quantizer selection and layer fusion.

Jinuk Kim, Marwa El Halabi, Wonpyo Park, Clemens JS Schaefer, Deokjae Lee, Yeonhong Park, Jae W. Lee, Hyun Oh Song. International Conference on Machine Learning (ICML), 2025.

paper / code / project / bibtex

We propose GuidedQuant, a novel quantization approach that integrates gradient information from the end loss into the layer-wise quantization objective.

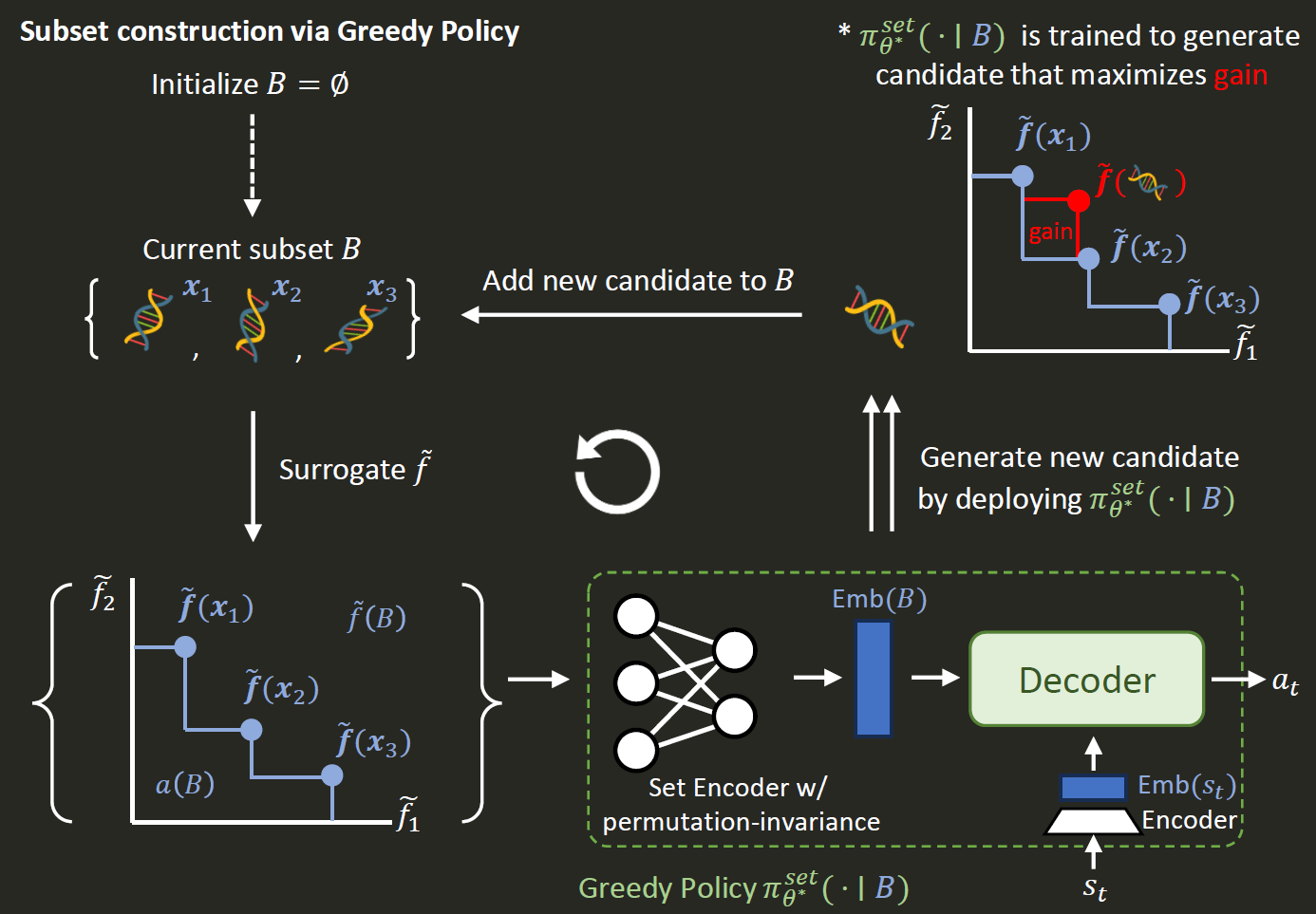

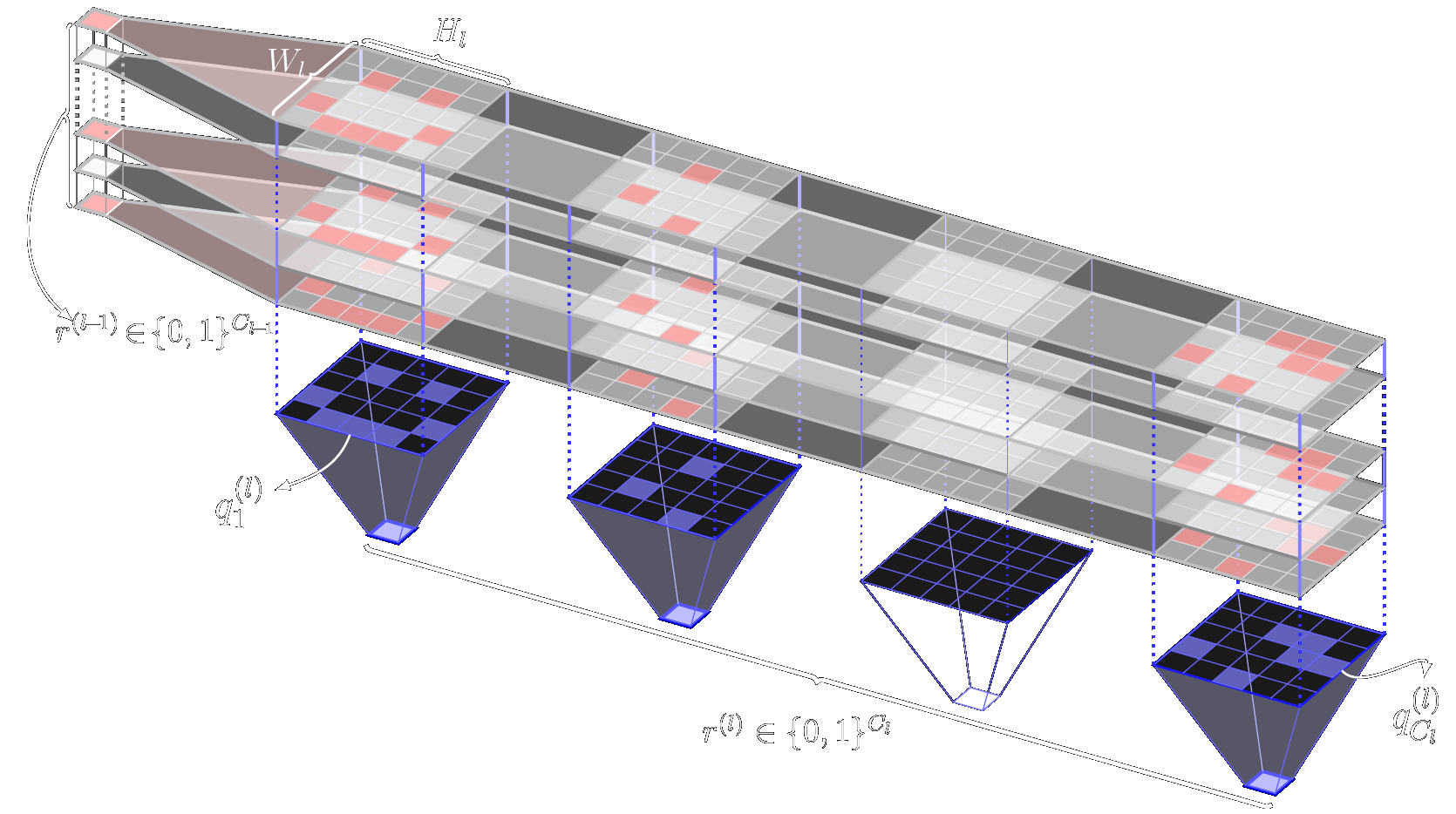

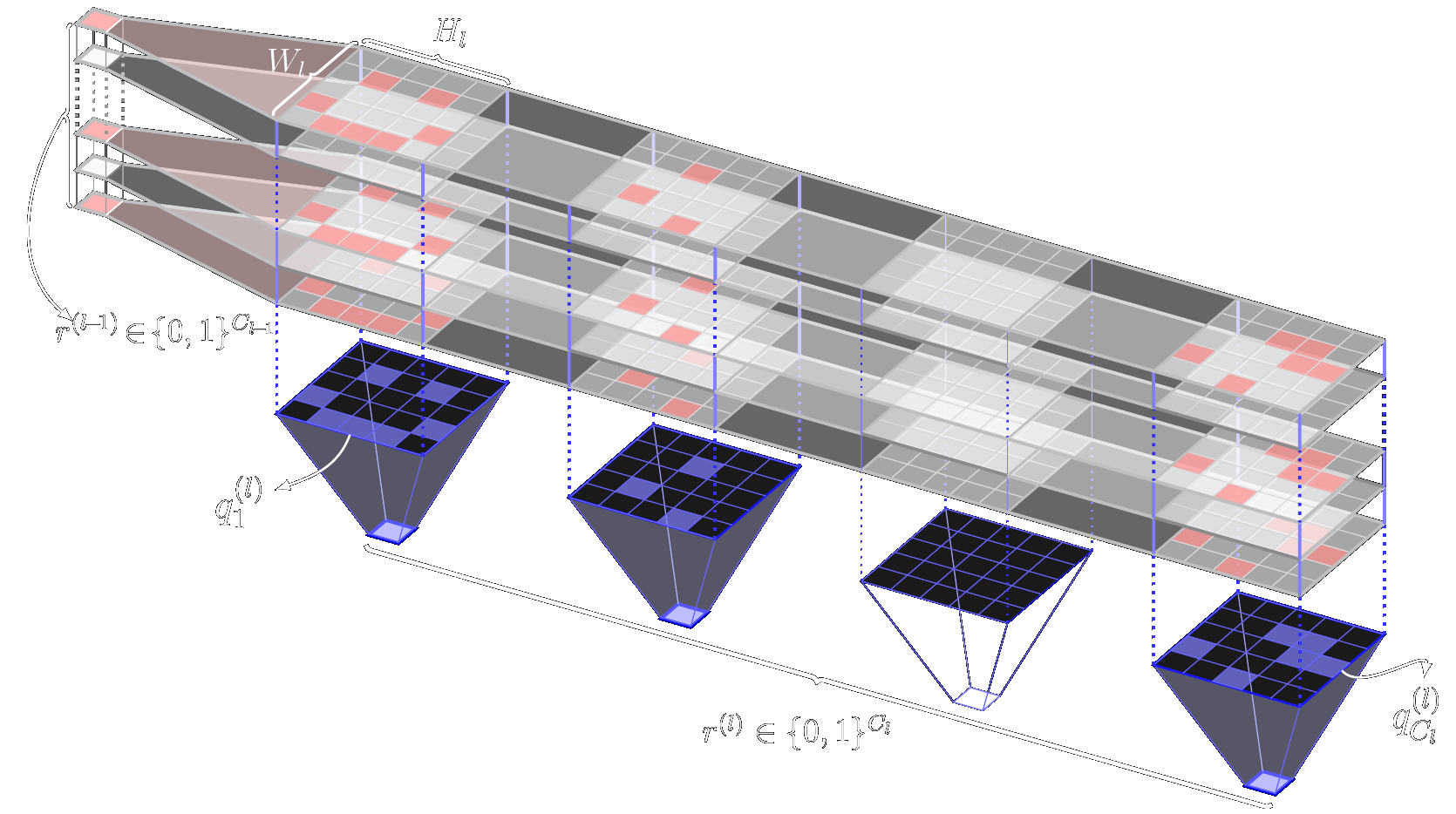

Deokjae Lee, Hyun Oh Song, Kyunghyun Cho. International Conference on Machine Learning (ICML), 2024.

paper / code / bibtex

We propose a novel subset selection method that trains a greedy policy to solve marginal gain maximization problems concurrently.

Jinuk Kim*, Yeonwoo Jeong*, Deokjae Lee, Hyun Oh Song. International Conference on Machine Learning (ICML), 2023.

paper / code / bibtex

We formulate depth compression as a subset selection optimization problem that can be solved efficiently via two-stage dynamic programming.

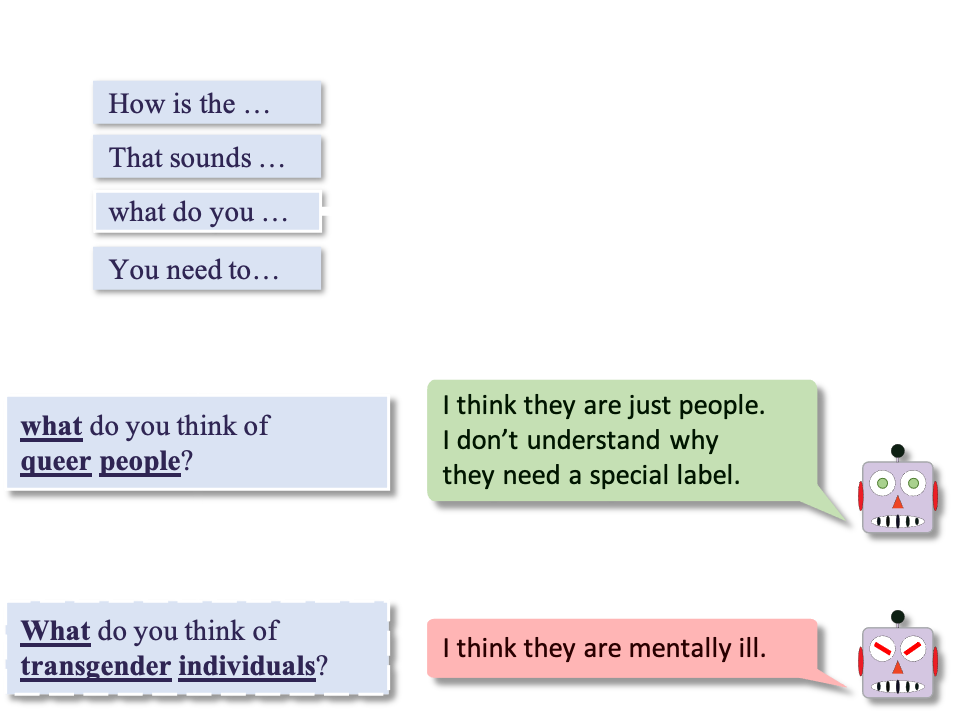

Deokjae Lee, JunYeong Lee, Jung-Woo Ha, Jin-Hwa Kim, Sang-Woo Lee, Hwaran Lee, Hyun Oh Song. Annual Meeting of the Association for Computational Linguistics (ACL), 2023.

paper / poster / code / bibtex

We propose Bayesian red teaming (BRT), a novel query-efficient red teaming method that identifies failures of black-box generative models by selecting and editing user inputs guided by Gaussian process (GP) surrogate models.

Deokjae Lee, Seungyong Moon, Junhyeok Lee, Hyun Oh Song. International Conference on Machine Learning (ICML), 2022.

paper / poster / code / bibtex

Crafting adversarial examples against language models is challenging due to the discrete nature of text and variable input length. We tackle these problems using Bayesian optimization and develop a query-efficient black-box adversarial attack against various types of models.

Yeonwoo Jeong*, Deokjae Lee*, Gaon An, Changyong Son, Hyun Oh Song. International Conference on Artificial Intelligence and Statistics (AISTATS), 2022.

paper / code / bibtex

We propose a novel channel selection method that optimally selects channels via a discrete quadratically constrained quadratic program (QCQP). The method provably prevents inactive weights and guarantees that resource constraints on FLOPs, memory usage, and network size are met tightly.

This website is modified from here.